Disclaimer: This Jupyter Notebook contains content generated with the assistance of AI. While every effort has been made to review and validate the outputs, users should independently verify critical information before relying on it. The SELENE notebook repository is constantly evolving. We recommend downloading or pulling the latest version of this notebook from GitHub.

Building a GPT-Style LLM from Scratch

This notebook demonstrates how to train a GPT-style language model from scratch using the Transformer implementation provided by PyTorch. The model is trained on a toy dataset of 100,000 movie reviews, which makes it computationally manageable while still providing meaningful insights into how Transformer-based language models learn. The primary goal of this notebook is educational: to help you understand the core components and workflow of training a language model, rather than to build a high-performance, state-of-the-art LLM.

The notebook begins by preparing the movie review dataset for a next-word prediction task, which is the standard training objective used in autoregressive models like GPT. This process includes tokenizing the text with a pretrained subword tokenizer, concatenating all reviews into a single token stream, and splitting this stream into overlapping input-target pairs so the model can learn to predict the next word in a sequence. Although the dataset is small, it allows the training process to be visualized and understood step by step, without the need for massive compute resources.

Next, the notebook defines a decoder-only Transformer model by leveraging PyTorch's built-in Transformer modules (nn.TransformerEncoder combined with a causal mask). This section illustrates how to configure the Transformer to function in a GPT-like manner, including the use of causal masking, sinusoidal positional encodings, and a simple output projection layer for next-token prediction. Using the native PyTorch API simplifies the implementation, allowing the focus to remain on understanding how the model architecture, positional encodings, and attention mechanism work together.

Finally, the notebook walks through the training loop, showing how to compute the cross-entropy loss, perform optimization steps, and periodically evaluate the model's ability to generate coherent text continuations. While the resulting model is not designed for real-world deployment, it demonstrates the fundamental process behind GPT-style LLM training in a transparent and reproducible way. Overall, this notebook serves as a hands-on educational guide to gain practical experience with Transformer-based language models. By using PyTorch's Transformer implementation, it balances conceptual clarity with practical simplicity, offering an accessible yet technically grounded introduction to autoregressive text modeling.

Setting up the Notebook

Make Required Imports

This notebook requires the import of different Python packages as well as additional Python modules that are part of the repository. If a package is missing, use your preferred package manager (e.g., conda or pip) to install it. If the code cell below runs without any errors, all required packages and modules have been successfully imported.

import sys
import numpy as np
from tqdm import tqdm
import torch
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F
from torch.utils.data import Dataset, DataLoader
from transformers import AutoTokenizer
from src.utils.compute.gpu import *
from src.utils.data.files import *

Download Required Data

Some code examples in this notebook use data that first need to be downloaded by running the code cell below. If this code cell throws an error, please check whether the URL for downloading datasets in the configuration file config.yaml is up to date and matches the one on GitHub. If not, simply download or pull the latest version from GitHub.

movie_reviews_zip, target_folder = download_dataset("text/corpora/reviews/movie-reviews-imdb.zip")

File 'data/datasets/text/corpora/reviews/movie-reviews-imdb.zip' already exists (use 'overwrite=True' to overwrite it).

We also need to decompress the archive file.

movie_reviews = decompress_file(movie_reviews_zip, target_path=target_folder)

print(movie_reviews)

['data/datasets/text/corpora/reviews/movie-reviews-imdb.txt']

Checking & Setting Computing Device

PyTorch allows us to train neural networks on supported GPUs to significantly speed up the training process. If you have a supported GPU, feel free to utilize it. However, a GPU is not strictly required for this notebook, particularly in demo mode, although training will be considerably slower on a CPU. We provide an auxiliary method to automatically select the best device. It checks if a supported GPU is available and, if so, uses it as the preferred device.

# Select preferred device (GPU, if available; CPU otherwise); you can enforce the use of the CPU by setting force_cpu=True

device = select_device(force_cpu=False)

print("Available device: {}".format(device))

Available device: cuda:0

Preliminaries

Before checking out this notebook, please consider the following:

This notebook is for education and not for building a state-of-the-art LLM. Not only is the dataset very small, it also stems from a single domain: movie reviews. We also use a small version of the GPT-2 model to keep things simple and reasonably fast. In any case, do not expect human-like responses from the trained model, particularly in demo mode (see below).

We assume that you have a good understanding of the Transformer architecture, including the attention mechanisms, causal masking, and positional encodings. If not, we have various other notebooks that cover these topics in great detail.

While not strictly required, we recommend using a GPU to speed up the training. Any modern consumer GPU supported by PyTorch should be fine. Even in full training mode, the default parameters are chosen such that the training will not require more than 10 GB of VRAM, while 16 GB is slowly becoming the standard even for consumer GPUs.

You can run the notebook in demo mode by setting mode = "demo" in the code cell below. In demo mode, we only use 10,000 out of the 100,000 movie reviews as well as a smaller GPT-2 model. We recommend first using the demo mode to see how long training the model for a single epoch takes on your machine.

mode = "demo"

#mode = "full"

Dataset Preparation

The ACL IMDB (Large Movie Review) dataset is a popular benchmark dataset for sentiment analysis, introduced by Andrew Maas et al. (2011). It contains a total of 100,000 movie reviews collected from IMDb, with 50,000 reviews being labeled as either positive or negative and evenly split into 25,000 reviews for training and 25,000 for testing. For training our language model, we do not need the sentiment labels. We therefore already preprocessed the original dataset such that all 100,000 reviews are in a single file, with one review per line. This preprocessing included the removal of any HTML tags and line breaks.

For the training of our model, we make the common assumption that the training data is structured as a continuous stream of documents, with each movie review representing a document. Document streams refer to the way text data is fed into the model during training: not as isolated documents, but as a continuous stream of text. Instead of treating each document as an independent unit, the training corpus is viewed as a long sequence of tokens coming from many documents concatenated together. This approach allows the model to learn language patterns and long-range dependencies across boundaries that would otherwise be artificially imposed by document splits. The training data still marks the boundaries between documents (e.g., by inserting a special end-of-document token [EOS]), so the model can learn where one document ends and the next one begins, but from the model's perspective, the data flows in a seamless stream. Thus, assuming $\text{doc}_{i}$ is the list of tokens representing the $i$-th document and $N$ is the number of documents, our document stream has the following format:

$$\text{doc}_{1}\ [\text{EOS}]\ \text{doc}_{2}\ [\text{EOS}]\ \text{doc}_{3}\ [\text{EOS}]\ \cdots\ \text{doc}_{N}\ [\text{EOS}]$$
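To make the format concrete, here is a minimal sketch of how such a stream can be assembled from already tokenized documents. The token IDs are made up for illustration, and the separator ID 50256 is assumed to be the ID of <|endoftext|> in the GPT-2 vocabulary; the helper name build_document_stream is hypothetical and not part of the notebook's code.

```python
# Assumed separator ID: 50256 is the ID of "<|endoftext|>" in the GPT-2 vocabulary
EOS_ID = 50256


def build_document_stream(docs, eos_id=EOS_ID):
    """Concatenate tokenized documents into one flat list, each followed by eos_id."""
    stream = []
    for doc in docs:
        stream.extend(doc)      # tokens of the current document
        stream.append(eos_id)   # mark the end of the document
    return stream


# Three toy "documents" with made-up token IDs
docs = [[11, 12, 13], [21, 22], [31, 32, 33, 34]]
stream = build_document_stream(docs)
print(stream)  # [11, 12, 13, 50256, 21, 22, 50256, 31, 32, 33, 34, 50256]
```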

In practice, the training data may be split into many such document streams so that each stream fits into memory. However, here we work with a small example dataset for educational purposes. This means that the complete stream of tokens can be represented by a single array that fits into memory, so we can avoid more sophisticated strategies such as splitting the dataset into multiple parts.

Document streaming is essential for efficient batching and scaling when training on massive corpora. LLMs are typically trained on trillions of tokens spread across billions of documents, and it’s computationally impractical to load entire documents or reinitialize contexts for each. Instead, data pipelines tokenize all documents in advance, concatenate them, and then dynamically sample fixed-length chunks (e.g., 1024 tokens) to feed into the model. This "streaming" setup keeps GPUs fully utilized and allows distributed training systems to continuously stream data without needing to restart or reshuffle individual documents frequently.
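The notebook itself later cuts the stream with a deterministic sliding window, but the dynamic sampling of fixed-length chunks described above can be sketched as follows. This is a simplified, single-process illustration that assumes the whole stream fits into a Python list; sample_chunks is a hypothetical helper, and real pipelines operate on far larger, split-up data.

```python
import random


def sample_chunks(stream, chunk_len, num_chunks, seed=0):
    """Randomly sample (input, target) pairs of length chunk_len from a token stream.

    Each target is the input shifted one position to the left, so every
    start index must leave room for chunk_len + 1 tokens.
    """
    rng = random.Random(seed)
    max_start = len(stream) - chunk_len - 1  # last valid start index
    if max_start < 0:
        raise ValueError("stream is shorter than chunk_len + 1 tokens")
    pairs = []
    for _ in range(num_chunks):
        start = rng.randint(0, max_start)  # randint is inclusive on both ends
        chunk = stream[start:start + chunk_len + 1]
        pairs.append((chunk[:-1], chunk[1:]))
    return pairs


stream = list(range(100))  # stand-in for a long token stream
for x, y in sample_chunks(stream, chunk_len=8, num_chunks=3):
    print(x, "->", y)
```

Because chunks are drawn at random positions, consecutive samples usually come from unrelated parts of the corpus, which is one reason this setup shuffles well at scale.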

Load Reviews from File

In the setup section of the notebook, we already downloaded the file containing all 100,000 movie reviews. The following code cell simply counts the number of reviews by counting the number of lines in the file, just to check if the dataset is complete. Note that we have to write movie_reviews[0] since movie_reviews is a list of files; it just so happens that the list contains only one file.

total_reviews = sum(1 for _ in open(movie_reviews[0]))

print(f"Total number of reviews (1 review per line): {total_reviews}")

Total number of reviews (1 review per line): 100000

Although we have a total of 100,000 reviews (each containing multiple sentences), we consider only 10,000 reviews in demo mode to speed up the training. You can edit the code cell below to increase or decrease the number of considered reviews. For a first run, we recommend sticking to 10,000 reviews to execute and understand the code.

if mode == "demo":
    num_considered_reviews = 10_000
else:
    num_considered_reviews = 100_000

num_reviews = min(total_reviews, num_considered_reviews)

print(f"Number of reviews used for training dataset: {num_reviews}")

Number of reviews used for training dataset: 10000

Tokenize & Generate Token Stream

Each document (i.e., each movie review) is represented as a string. We therefore have to tokenize each review and map each token to a unique token index. In principle, we could implement both steps on our own (e.g., tokenizing using spaCy and creating a vocabulary for mapping tokens to indices). However, to focus on the LLM training, we use the pretrained GPT-2 tokenizer to handle both the tokenization and indexing for us. The GPT-2 tokenizer is a subword tokenizer based on the Byte-Pair Encoding (BPE) algorithm.

In a nutshell, BPE (and similar approaches) learns how to tokenize words from data. Instead of splitting text strictly into full words or individual characters, BPE breaks words into frequently occurring subword units. For example, "unhappiness" might become "un", "happi", and "ness". This enables the model to represent common words as single tokens while decomposing rare words into smaller, reusable pieces, preventing the out-of-vocabulary (OOV) problem that word-level tokenizers suffer from. Because subword tokens capture meaningful morphological patterns (prefixes, suffixes, roots), models can often infer the meaning of new or compound words based on familiar subparts. This reduces the total number of parameters needed for embeddings and improves the model's ability to generalize to unseen text. You can learn all about BPE in a separate notebook.
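The core learning loop of BPE can be sketched on a toy corpus: count adjacent symbol pairs (weighted by word frequency), merge the most frequent pair into a new symbol, and repeat. This is a simplified, character-level version of the original BPE algorithm for subword segmentation; the end-of-word marker </w>, the toy word counts, and all function names are assumptions of this sketch. GPT-2 instead uses a byte-level variant with a fixed, pretrained merge table, so its actual tokens will differ.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every adjacent occurrence of pair with the merged symbol."""
    merged_vocab = {}
    for word, freq in vocab.items():
        symbols, out, i = word.split(), [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged_vocab[" ".join(out)] = freq
    return merged_vocab


def learn_bpe(vocab, num_merges):
    """Greedily learn num_merges merge rules (ties: first pair encountered wins)."""
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


def apply_bpe(word, merges):
    """Segment a (possibly unseen) word by replaying the learned merges in order."""
    vocab = {" ".join(word) + " </w>": 1}
    for pair in merges:
        vocab = merge_pair(pair, vocab)
    return next(iter(vocab)).split()


# Words split into characters plus an end-of-word marker, with toy frequencies
corpus = {"l o w </w>": 5, "l o w e r </w>": 2, "n e w e s t </w>": 6, "w i d e s t </w>": 3}
merges, final_vocab = learn_bpe(corpus, num_merges=3)
print(merges)                        # [('e', 's'), ('es', 't'), ('est', '</w>')]
print(apply_bpe("lowest", merges))   # ['l', 'o', 'w', 'est</w>']
```

Note how the unseen word "lowest" is segmented into known pieces, reusing the learned suffix est</w>.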

To load the pretrained GPT-2 tokenizer, we can use the AutoTokenizer class of the Hugging Face Transformers library. This class is a high-level interface that automatically selects the appropriate tokenizer for a given pretrained model, abstracting away the need to know the specific tokenizer class. The from_pretrained() method loads a tokenizer that has already been trained on a specific model's vocabulary and tokenization rules, either from the Hugging Face model hub or a local path. This ensures that the tokenizer is configured consistently with the pretrained model, including token-to-ID mappings, special tokens, and any subword tokenization scheme (e.g., BPE), making it ready for encoding text into token IDs suitable for model training or inference. The code cell below uses this class and method to load the GPT-2 tokenizer.

tokenizer = AutoTokenizer.from_pretrained("gpt2")

Recall that for our document stream, we need to indicate when one movie review ends and another review starts using some [EOS] (end-of-sequence) token. However, we cannot simply define our own unique token but must use a token that is known to the tokenizer, i.e., the token is part of the existing vocabulary. Most tokenizers include a small set of special tokens to indicate the end of a sequence, the beginning of a sequence, padding tokens, masked tokens, etc. — all depending on the data and learning task.

We can check the special_tokens_map of the GPT-2 tokenizer to see which special tokens it supports:

tokenizer.special_tokens_map

{'bos_token': '<|endoftext|>',

'eos_token': '<|endoftext|>',

'unk_token': '<|endoftext|>'}

We can see that the GPT-2 tokenizer recognizes only one special token: <|endoftext|>. Since GPT-2 was also trained on a document stream, it only needs a single token acting as a separator between documents, which serves as both the [EOS] and the [BOS] token. The GPT-2 tokenizer also does not require a dedicated [UNK] (unknown) token, since its byte-level BPE can always split unknown words into known subwords or, if needed, even individual bytes.

Let's define this <|endoftext|> token as a constant for creating our document stream.

EOS_TOKEN_GPT2 = "<|endoftext|>"

With the tokenizer, we can now go through all movie reviews (or the maximum number of reviews specified) and tokenize them; see the code cell below. Notice that we preprocess each review before tokenizing by removing leading and trailing whitespace (including the newline character), converting all words to lowercase, and appending the special [EOS] token at the end.

Lowercasing all words when training a language model on a small dataset helps reduce the vocabulary size and simplify the learning problem, which is especially important when data is limited. In small datasets, treating "Apple" and "apple" as different tokens would split their occurrences across two separate representations, weakening the model's ability to learn consistent word meanings. By lowercasing, all variants are merged into a single token, allowing the model to see more examples of each word and thus learn better statistical patterns. This normalization step reduces data sparsity, speeds up convergence, and focuses the model's limited capacity and training data on learning core linguistic relationships rather than case variations. And again, our goal in this notebook is very far from building a state-of-the-art LLM.

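tokens = []

with open(movie_reviews[0]) as file:
    for idx, review in enumerate(tqdm(file, total=num_reviews, leave=False)):
        if idx >= num_reviews:
            break
        # Strip whitespace/newline, lowercase, and append the separator token;
        # truncation=True with max_length=sys.maxsize effectively disables truncation
        # and avoids the warning about sequences longer than GPT-2's 1024-token limit
        tokens.extend(tokenizer.encode(f"{review.strip().lower()} {EOS_TOKEN_GPT2}", truncation=True, max_length=sys.maxsize))

print(f"Total number of tokens: {len(tokens)}")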

Total number of tokens: 2922984

Create Dataset and DataLoader

In PyTorch, the Dataset class is an abstraction that defines how data is accessed and preprocessed for training. It provides a consistent interface to load individual samples and their labels through the methods __len__() and __getitem__(). This allows you to wrap any type of data (text, images, tabular data, etc.) into a standardized format that PyTorch models can easily consume. The DataLoader class then builds on top of this by handling the efficient batching, shuffling, and parallel loading of data samples from a Dataset. It automatically groups multiple samples into mini-batches and can use multiple worker processes to load data in parallel, which helps keep the GPU busy during training.
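The contract between the two classes can be illustrated without PyTorch: a map-style dataset only needs __len__() and __getitem__(), and a loader turns it into shuffled mini-batches. The following pure-Python sketch imitates this behavior for a single process; ToyDataset and iterate_batches are hypothetical names, and unlike the real DataLoader, this sketch neither collates samples into tensors nor uses worker processes.

```python
import random


class ToyDataset:
    """Map-style dataset: implements __len__ and __getitem__, like torch Dataset."""

    def __init__(self, samples):
        self.samples = samples

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, idx):
        return self.samples[idx]


def iterate_batches(dataset, batch_size, shuffle=True, drop_last=True, seed=0):
    """Rough single-process imitation of one epoch over a DataLoader."""
    indices = list(range(len(dataset)))
    if shuffle:
        random.Random(seed).shuffle(indices)  # new sample order for this epoch
    for start in range(0, len(indices), batch_size):
        batch_idx = indices[start:start + batch_size]
        if drop_last and len(batch_idx) < batch_size:
            break  # discard the incomplete last batch
        yield [dataset[i] for i in batch_idx]


ds = ToyDataset(list(range(10)))
batches = list(iterate_batches(ds, batch_size=4))
print(len(batches))  # 2 (the incomplete last batch of 2 samples is dropped)
```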

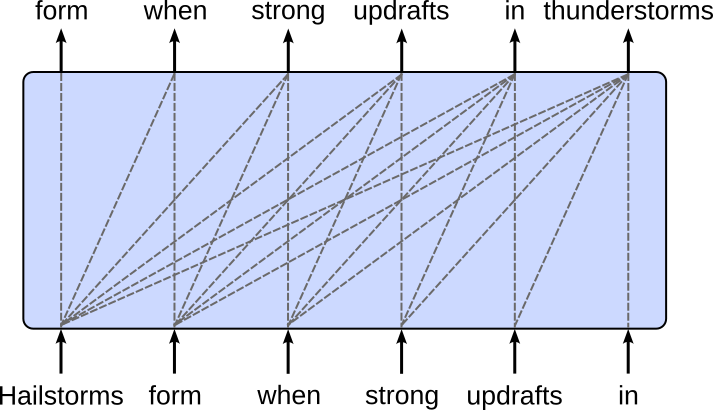

For creating our Dataset instance, recall that GPT-style LLMs are trained on the next-word prediction task: given a sequence of words, which word is most likely to follow? The figure below shows the required training setup for the Transformer decoder. The target sequence is (almost) the same as the input sequence, only shifted 1 token to the left. Note that the dashed line represents the causal masking, where the prediction of a token only depends on preceding tokens but not on "future" tokens. Recall that the decoder processes all tokens in parallel during training, so we need to mask the attention between a token and all tokens following it.
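The shift between inputs and targets is easiest to see on a toy example. In the sketch below, words stand in for token IDs and shift_for_next_token is a hypothetical helper; for each position t, the model sees the inputs up to and including position t and should predict targets[t].

```python
def shift_for_next_token(chunk):
    """Split a chunk of n+1 tokens into an n-token input and an n-token target."""
    return chunk[:-1], chunk[1:]


tokens = ["the", "movie", "was", "really", "good", "<|endoftext|>"]
inputs, targets = shift_for_next_token(tokens)

for t in range(len(inputs)):
    # Causal setup: at position t, only inputs[0..t] are visible
    print(inputs[:t + 1], "->", targets[t])
```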

Of course, we cannot give the whole sequence of tokens to the model at once. When training LLMs, the context size, i.e., the number of tokens the model can attend to at once, is typically fixed to a maximum value due to both computational and architectural constraints. Transformer models compute attention across all token pairs within a sequence, which scales quadratically with sequence length in both memory and computation cost. This means that doubling the context size roughly quadruples the resources required per training step. To make training feasible on available hardware, a practical upper bound (e.g., 512, 1024, or 4096 tokens) is chosen so that the model can learn meaningful dependencies without exhausting GPU memory or dramatically slowing training.
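A rough back-of-the-envelope calculation illustrates the quadratic growth. The sketch below only counts the raw attention score matrices (one seq_len x seq_len matrix per head, per layer, per sample) stored as 32-bit floats; it ignores all other activations, the weights, and the optimizer state, as well as memory-efficient attention kernels that avoid materializing these matrices. The defaults are assumptions borrowed from values used elsewhere in this notebook (batch size 64, and the GPT2LanguageModel defaults of 8 heads and 6 layers), which may differ from the configuration you actually train.

```python
def attention_score_memory_mb(seq_len, batch_size=64, n_heads=8, n_layers=6, bytes_per_value=4):
    """Approximate memory (in MiB) for the raw attention score matrices only."""
    values = batch_size * n_heads * n_layers * seq_len * seq_len
    return values * bytes_per_value / 2**20


for n in (128, 256, 512, 1024):
    print(n, f"{attention_score_memory_mb(n):.0f} MB")
```

Doubling the context size from 128 to 256 tokens increases this estimate from 192 MB to 768 MB, i.e., by a factor of four.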

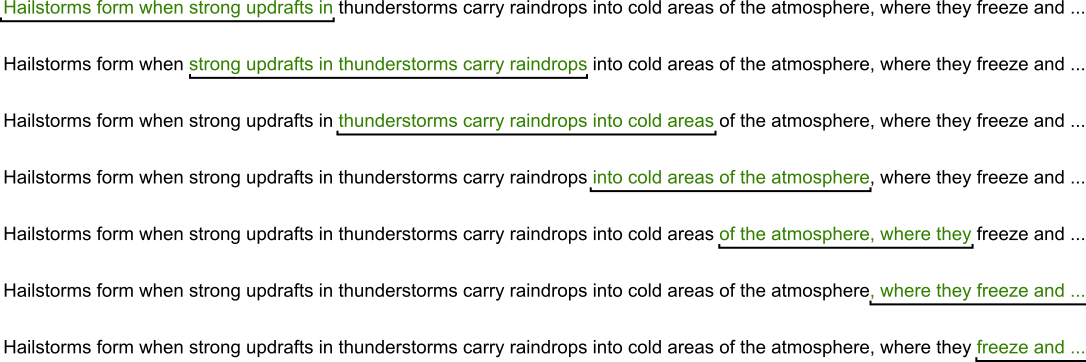

We therefore have to feed the token stream to the model in chunks. In this notebook, we use a common sliding window approach that forms chunks of a fixed size. More specifically, we use a sliding window with a 50% overlap; see the example in the figure below. In this simple example, the context size is 6 tokens, so a 50% overlap means that the last 3 tokens of the current chunk will be the first 3 tokens of the next chunk.

Both the sliding window approach and the generation of the target sequences by shifting the input sequences 1 token to the left are very straightforward. The class CausalLMDataset in the code cell below implements both steps as a custom Dataset class. The max_len parameter specifies the maximum context size, and the optional stride parameter specifies by how many tokens the sliding window is moved each time. If stride=None, the window is moved by the whole context size, resulting in non-overlapping chunks.
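The windowing logic can be checked on the small example with a context size of 6 and a stride of 3, without PyTorch. The function below is a hypothetical pure-Python mirror of the loop in CausalLMDataset; it returns plain lists instead of LongTensors.

```python
def sliding_window_pairs(tokens, max_len, stride=None):
    """Pure-Python mirror of the windowing loop in CausalLMDataset."""
    if stride is None:
        stride = max_len  # non-overlapping chunks
    pairs = []
    for i in range(0, len(tokens) - max_len, stride):
        # Input window and the same window shifted 1 token to the left
        pairs.append((tokens[i:i + max_len], tokens[i + 1:i + max_len + 1]))
    return pairs


tokens = list(range(1, 16))  # 15 toy token IDs: 1, 2, ..., 15
pairs = sliding_window_pairs(tokens, max_len=6, stride=3)
for inp, tgt in pairs:
    print(inp, "->", tgt)
```

The last two tokens (14 and 15) do not fill a complete window and are therefore dropped, just as with the range() bound used in CausalLMDataset.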

class CausalLMDataset(Dataset):

    def __init__(self, tokens, max_len=128, stride=None):
        self.input_ids = []
        self.target_ids = []
        # Without an explicit stride, create non-overlapping chunks
        if stride is None:
            stride = max_len
        # Slide a window of max_len tokens over the stream; the target is the input shifted by 1
        for i in range(0, len(tokens)-max_len, stride):
            self.input_ids.append(torch.LongTensor(tokens[i:(i+max_len)]))
            self.target_ids.append(torch.LongTensor(tokens[(i+1):(i+max_len+1)]))

    def __len__(self):
        return len(self.input_ids)

    def __getitem__(self, idx):
        return self.input_ids[idx], self.target_ids[idx]

Let's create an instance of this CausalLMDataset by passing our list of tokens as input. Throughout the notebook, we use a context size of 128 tokens by default. Since we aim for a 50% overlap between chunks, we set stride to half the context size.

context_size = 128

dataset = CausalLMDataset(tokens, max_len=context_size, stride=context_size//2)

Lastly, we can create a DataLoader instance that handles all the batching and shuffling of the samples for us.

loader = DataLoader(dataset, batch_size=64, shuffle=True, drop_last=True)
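As a quick sanity check, the number of training samples and batches per epoch follows directly from the token count printed earlier (demo mode), the context size, the stride, and the batch size. The numbers below assume exactly those values; they will differ in full mode or if your tokenizer version produces a different token count.

```python
import math

num_tokens = 2_922_984  # token count printed earlier (demo mode)
context_size = 128
stride = context_size // 2
batch_size = 64

# Same start indices as range(0, len(tokens)-max_len, stride) in CausalLMDataset
num_samples = len(range(0, num_tokens - context_size, stride))
assert num_samples == math.ceil((num_tokens - context_size) / stride)

# drop_last=True discards the final incomplete batch
num_batches = num_samples // batch_size
print(num_samples, num_batches)  # 45670 713
```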

With the data loader, we are now ready to train our model. Again, keep in mind that the data preparation was rather simple since we only work with a very small dataset (at least very small in the context of training an LLM), so that the complete dataset fits into the memory of a single machine, which is sufficient for our purpose here.

Auxiliary Methods

For the model training and the very crude qualitative evaluation of the model (discussed later), we next define a few auxiliary methods that keep the code clean and also support strategies such as checkpointing, which is essential for training large models in practice.

Training a Single Epoch

The method train_epoch() takes a data loader, a model, and an optimizer to train the model for a single epoch by iterating over all batches of the data loader. Each batch, composed of input and target sequences, is passed to the model to compute the model output (i.e., the logits) and the loss (based on the loss function defined as part of the model). For this method, we only need the loss to perform backpropagation and update the model weights according to the optimizer's update rule. During the epoch, the method sums up the losses of all batches into a final epoch loss, which is then returned from the method.

def train_epoch(loader, model, optimizer, description):
    epoch_loss = 0.0
    model_device = next(model.parameters()).device

    # Enable training behavior (e.g., dropout), in case the model was set to eval mode before
    model.train()

    for idx, (inputs, targets) in enumerate(tqdm(loader, desc=description, leave=False)):
        # Move current batch to GPU, if available
        inputs, targets = inputs.to(model_device), targets.to(model_device)

        # Calculate loss
        logits, loss = model(inputs, targets)

        # Reset the gradients from previous iteration
        model.zero_grad()

        # Calculate new gradients using backpropagation
        loss.backward()

        # Update all trainable parameters (i.e., the theta values of the model)
        optimizer.step()

        # Update epoch loss
        epoch_loss += loss.item()

    return epoch_loss

When later training a model over several epochs, we can simply repeatedly call this method to handle training of a single epoch.

Saving & Loading Checkpoints

Training a Transformer-based LLM takes a lot of time, even for a rather small model trained on a rather small dataset. A checkpoint in model training is a saved snapshot of the model's state at a specific point during training, typically after a certain number of steps or epochs. It usually includes the model's parameters (weights and biases), the optimizer state (to resume learning with the same momentum and learning rate adjustments), and sometimes metadata like the current epoch or training loss. This allows training to be paused and later resumed from that point without starting over, which is especially important for large models that take days or weeks to train.

While many libraries have built-in support for periodically saving checkpoints, in this notebook we purposefully use only PyTorch and avoid libraries with a higher level of abstraction. Fortunately, saving a checkpoint is very straightforward. The method save_checkpoint() defined in the code cell below takes a model and an optimizer instance, as well as the current epoch and epoch loss. The method then combines all information required to resume training into a single dictionary and uses PyTorch's save() method to write this object to a file.

In PyTorch, the state_dict() object is a Python dictionary that contains all the learnable parameters and persistent buffers of a model, or the internal state of an optimizer. For models, it maps each parameter or buffer name to its corresponding tensor (like weights and biases). For optimizers, it includes the current state of optimization variables such as momentum buffers, as well as hyperparameters such as the learning rate. This dictionary enables easy saving, loading, and transferring of model and optimizer states, making it central to checkpointing and model deployment. By calling torch.save(model.state_dict(), path), you can preserve a model's learned parameters and later restore them with model.load_state_dict(torch.load(path)), so that training or inference can continue from that point.

def save_checkpoint(model, optimizer, epoch, loss, path="checkpoint.pt"):
    checkpoint = {
        "epoch": epoch,
        "model_state_dict": model.state_dict(),
        "optimizer_state_dict": optimizer.state_dict(),
        "loss": loss,
    }
    torch.save(checkpoint, path)
    print(f"Checkpoint saved at {path}")

Naturally, the counterpart to saving a checkpoint is loading a saved checkpoint, as implemented by the method load_checkpoint() below. Notice that this method also takes in a model and an optimizer instance. In other words, the method does not create a model or optimizer but sets the states of both instances to the states read from the checkpoint file. This of course only works if the model and the optimizer have the same "structure" as the model and optimizer used for training. For example, we cannot train a Transformer model with 4 layers and then load its state into a model with more or fewer layers.

Also, notice the map_location parameter of PyTorch's load() method. This parameter controls how tensors are remapped to devices (like CPU or GPU) when loading a saved model or checkpoint. This is useful when the model was trained on one device but needs to be loaded on another; for example, loading GPU-trained weights onto a CPU-only machine. By specifying map_location='cpu', all tensors are loaded to the CPU regardless of where they were originally saved, while map_location='cuda:0' loads them to the first GPU. It can also take a custom function or dictionary to flexibly remap devices, ensuring model compatibility across different hardware setups and preventing errors caused by unavailable devices.

def load_checkpoint(model, optimizer, path="checkpoint.pt", device="cuda"):
    checkpoint = torch.load(path, map_location=device)
    model.load_state_dict(checkpoint["model_state_dict"])
    optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
    epoch = checkpoint["epoch"]
    loss = checkpoint["loss"]
    print(f"Checkpoint loaded (epoch {epoch}, loss {loss:.4f})")
    return epoch, loss

Generate & Save Example Responses

When training any kind of model, we typically track the progress by measuring some form of validation loss over time. However, here we keep it very simple and omit a separate validation dataset for computing a validation loss after each epoch. After all, the loss alone does not tell us much about how coherent or meaningful the generated text actually is. Instead, we first define the method generate_response() that returns the response generated by a model for a given prompt. Notice that this method also needs the tokenizer to encode the prompt and decode the generated response.

def generate_response(prompt, tokenizer, model, max_new_tokens=50):
    model_device = next(model.parameters()).device
    prompt_encoded = tokenizer.encode(prompt, return_tensors="pt").to(model_device)
    generated = model.generate(prompt_encoded, max_new_tokens=max_new_tokens)
    output_text = tokenizer.decode(generated[0], skip_special_tokens=True)
    return output_text
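The generate() method called above is part of the model class and is not shown in this section. As an assumption about how such a method can work, the following sketch implements plain greedy decoding: repeatedly crop the sequence to the last context_size tokens, score the next token, append the highest-scoring one, and optionally stop at an end-of-text token. The notebook's own implementation may differ, for example by sampling from the softmax distribution instead of taking the argmax. greedy_generate and toy_scores are hypothetical names, and toy_scores merely stands in for a real model.

```python
def greedy_generate(next_token_scores, prompt_ids, max_new_tokens, context_size, eos_id=None):
    """Greedy autoregressive decoding.

    next_token_scores(ids) stands in for the model: it takes the current
    context (list of token IDs) and returns one score per vocabulary entry
    for the next token.
    """
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        context = ids[-context_size:]  # the model only sees the last context_size tokens
        scores = next_token_scores(context)
        next_id = max(range(len(scores)), key=scores.__getitem__)  # argmax
        ids.append(next_id)
        if eos_id is not None and next_id == eos_id:
            break
    return ids


def toy_scores(context):
    """Toy 'model' over a 5-token vocabulary that always favors (last token + 1) % 5."""
    scores = [0.0] * 5
    scores[(context[-1] + 1) % 5] = 1.0
    return scores


print(greedy_generate(toy_scores, [0], max_new_tokens=6, context_size=3))  # [0, 1, 2, 3, 4, 0, 1]
```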

Similar to saving checkpoints when training the model for a long time, we might also want to save the generated responses for some prompts, e.g., after each epoch. This allows us to later inspect the generated files to see how the quality of the generated responses has changed over time. The method generate_example_responses() implements this idea using three simple example prompts related to movie reviews; of course, we can edit these prompts or add additional ones. All generated responses are stored in a file with the specified file name.

def generate_example_responses(tokenizer, model, path="example-responses.txt"):
    prompts = ["the best part of the movie was", "my favorite scene in the movie", "the script and the direction"]

    with open(path, "w") as file:
        for prompt in prompts:
            response = generate_response(prompt, tokenizer, model)
            file.write(f"{response}\n\n")

In the full training mode, we save checkpoints and example responses to disk. Using the code cell below, you can create a folder into which the checkpoint and output files are stored. You can customize the path to fit your local setup.

folder = create_folder("data/generated/models/gpt2/")

print(folder)

data/generated/models/gpt2/

Creating the Model

The GPT-2 model is a decoder-only Transformer architecture; that is, it is designed specifically for autoregressive language modeling, where the goal is to predict the next token in a sequence based solely on previous tokens. Unlike the original Transformer, which uses an encoder-decoder structure for sequence-to-sequence tasks such as translation, GPT-2 discards the encoder and retains only the decoder blocks, each composed of masked self-attention and feedforward layers. Importantly, it applies causal masking, ensuring that each token can only attend to itself and earlier positions, thereby preserving the left-to-right generation order required for text prediction. This simplified, decoder-only design makes GPT-2 well suited for generative tasks while retaining the core building blocks of the Transformer architecture.

Positional Encoding

During training and inference, the Transformer processes an input sequence in parallel by computing the alignment scores between all pairs of input tokens as part of the attention mechanism. As a consequence, the Transformer has no built-in mechanism to capture the position of tokens. However, for properly understanding language and text, word/token order matters. This is where positional encodings come into play. Similar to word embeddings, positional encodings are vectors, but they capture the position of each token rather than its identity. We have several dedicated notebooks that talk about the importance of positional encodings and common strategies.

In this notebook, we adopt the positional encodings proposed in the original Transformer paper "Attention Is All You Need". In a nutshell, these absolute positional encodings are added to the input embeddings at the bottom of the encoder and decoder stacks, allowing the model to leverage both token identity and position. Rather than learning these positions as parameters, the paper proposed a fixed sinusoidal encoding scheme based on sine and cosine functions of varying frequencies. The sinusoidal formulation maps each position in the sequence to a unique vector, and the authors chose it because it may allow the model to extrapolate to sequence lengths longer than those seen during training. Specifically, for each position $pos$ and dimension index $i$ (with $d_{\text{model}}$ being the embedding size), the encoding is defined as:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)$$

This design produces smooth, continuous patterns that encode both absolute position and relative distance information through the phase difference between positions, providing the Transformer with a simple yet effective way to model sequential structure without relying on recurrence or convolution. Again, we cover this approach in more detail in its own notebook.
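The encoding can be sketched in a few lines of NumPy, following the definition from the paper: even dimensions use sin(pos / 10000^(2i/d_model)) and odd dimensions use the cosine of the same angle. The function name sinusoidal_encoding and the small sizes are illustrative choices. The last line checks the relative-distance property mentioned above: the dot product of two encodings depends only on their offset, since sin(a)sin(b) + cos(a)cos(b) = cos(a - b).

```python
import numpy as np


def sinusoidal_encoding(max_len, d_model):
    """PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle)."""
    assert d_model % 2 == 0, "d_model must be even"
    pos = np.arange(max_len)[:, None]                   # (max_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]           # (1, d_model/2): 0, 2, 4, ...
    angles = pos / np.power(10000.0, two_i / d_model)   # (max_len, d_model/2)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


pe = sinusoidal_encoding(max_len=128, d_model=16)
print(pe.shape)      # (128, 16)
print(pe[0, :4])     # position 0: sin(0) = 0 and cos(0) = 1 alternate
# Same offset (7) at different absolute positions gives the same dot product
print(np.isclose(pe[3] @ pe[10], pe[50] @ pe[57]))  # True
```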

The class PositionalEncoding in the code cell below implements this sinusoidal encoding strategy. The advantage of using fixed absolute positional encodings is that all vectors can be computed a priori and then simply added to the word embedding vectors during training and inference. By using the register_buffer() method, we specify that the positional encodings are part of the model but should not be treated as learnable parameters, and therefore will not be updated by the optimizer. Registering them as a buffer also ensures that they are moved together with the model when calling .to(device) and that they are included in the model's state_dict(), and thus in our checkpoints.

class PositionalEncoding(nn.Module):

    def __init__(self, emb_size: int, dropout: float, maxlen: int = 1024):
        super(PositionalEncoding, self).__init__()
        # 1 / 10000^(2i/emb_size) for all even dimension indices 2i
        den = torch.exp(- torch.arange(0, emb_size, 2) * np.log(10000) / emb_size)
        pos = torch.arange(0, maxlen).reshape(maxlen, 1)
        pos_embedding = torch.zeros((maxlen, emb_size))
        pos_embedding[:, 0::2] = torch.sin(pos * den)
        pos_embedding[:, 1::2] = torch.cos(pos * den)
        # Shape (1, maxlen, emb_size) so that it broadcasts over the batch dimension
        pos_embedding = pos_embedding.unsqueeze(0)
        self.dropout = nn.Dropout(dropout)
        self.register_buffer('pos_embedding', pos_embedding)

    def forward(self, token_embedding):
        # Expects batch-first input of shape (batch_size, seq_len, emb_size), matching batch_first=True below
        return self.dropout(token_embedding + self.pos_embedding[:, :token_embedding.size(1), :])

Transformer Model¶

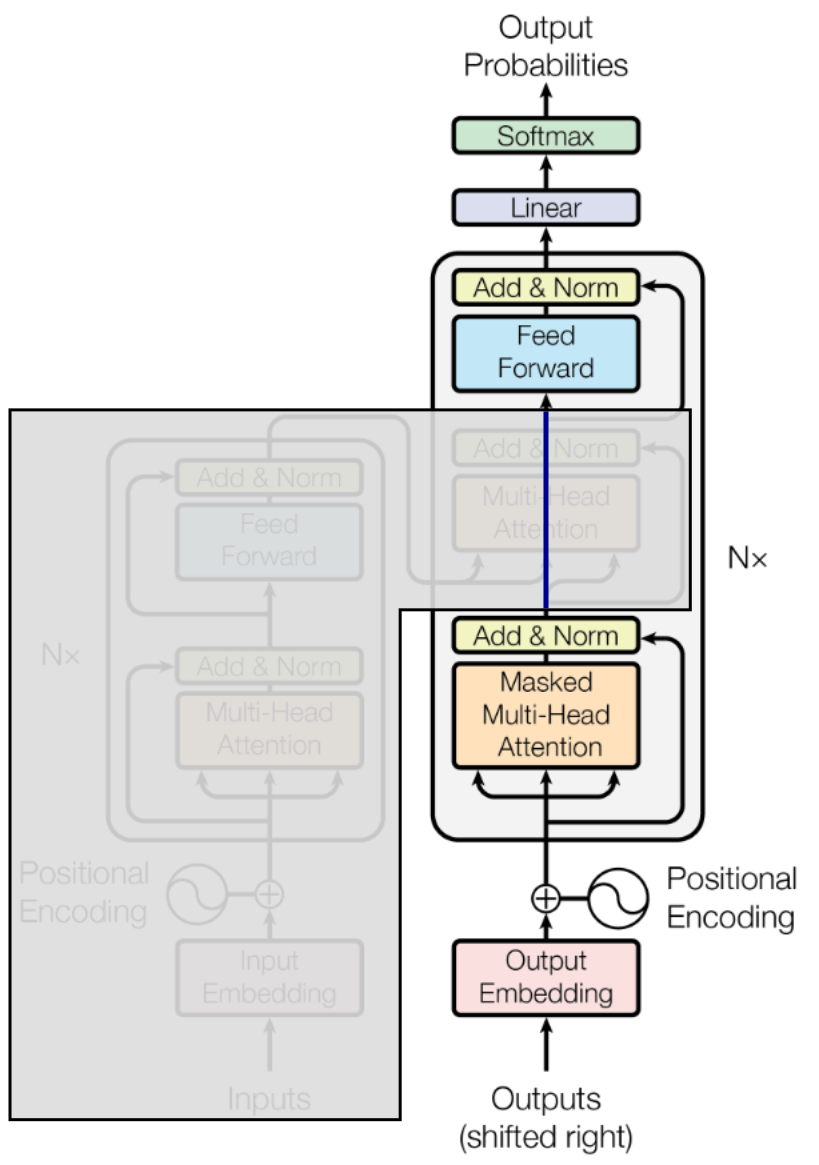

GPT-2, like many other popular LLMs, is a so-called decoder only model. This not only means that the model uses only the decoder of the overall architecture but also that the decoder is missing the cross-attention layer that aligns the encoder output with the decoder input. The figure below illustrates this idea by shading out all the parts of the complete Transformer architecture not being used for training a decoder-only LLM such as GPT-2.

To implement our model, we can directly use the classes implementing the Transformer architecture provided by PyTorch. This includes the nn.TransformerDecoder class, which implements the decoder part of the Transformer. The problem is that this class expects, as an additional input, the output of an encoder (the so-called memory) for its cross-attention layers, which we obviously do not have in our decoder-only setup. However, the figure above shows that the decoder without the cross-attention block has the same structure as the encoder: a self-attention layer followed by a feed-forward network, each with a residual connection and layer normalization. Thus, we can simply use the nn.TransformerEncoder class. We only have to remember to use a causal mask during training and inference to prevent the model from "looking into the future", i.e., from attending to tokens that come after the current position.
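The claim that an encoder stack plus a causal mask behaves like a decoder-only stack can be checked directly. With the mask in place, changing later tokens must not change the outputs at earlier positions. The sketch below uses arbitrary small sizes, with dropout disabled and the model in eval mode so that outputs are deterministic. In PyTorch, a boolean mask marks with True the positions that may not be attended to.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, T = 16, 6
layer = nn.TransformerEncoderLayer(d_model=d_model, nhead=2, dim_feedforward=32,
                                   dropout=0.0, batch_first=True)
encoder = nn.TransformerEncoder(layer, num_layers=2).eval()

# Boolean causal mask: True above the diagonal = "may not attend to that position"
causal = torch.triu(torch.ones(T, T), diagonal=1).bool()

x = torch.randn(1, T, d_model)
x_changed = x.clone()
x_changed[:, 4:, :] = torch.randn(1, T - 4, d_model)  # modify only positions 4 and 5

with torch.no_grad():
    y = encoder(x, mask=causal)
    y_changed = encoder(x_changed, mask=causal)

# Positions 0-3 only attend to positions 0-3, so their outputs are unaffected
print(torch.allclose(y[:, :4], y_changed[:, :4], atol=1e-5))
```

Without the mask argument, every position would attend to the modified tokens, and all six outputs would change.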

The class GPT2LanguageModel below implements the complete model. Apart from the vocabulary size, the constructor's input parameters specify the configuration of the encoder layers, including the embedding size, the number of attention heads, and the number of layers. The internal method _generate_square_subsequent_mask() precomputes the causal mask for the maximum context size (i.e., the maximum length of the input sequence). In forward(), this mask is trimmed to the length of the current input. Again, since the mask contains fixed values and not trainable parameters, we use register_buffer() to add the mask to the model's state. Overall, this class is essentially a 1:1 implementation of the figure above.
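Trimming works because the causal mask for a shorter sequence is exactly the top-left corner of the mask for a longer one. The plain-Python sketch below illustrates this. Its causal_mask() function is a hypothetical helper that mirrors torch.triu(torch.ones(sz, sz), diagonal=1).bool() using nested lists, so it runs without PyTorch.

```python
def causal_mask(size):
    """True where query position i must NOT attend to key position j (j > i)."""
    return [[j > i for j in range(size)] for i in range(size)]

full = causal_mask(128)                  # precomputed once for the maximum context size
T = 4
trimmed = [row[:T] for row in full[:T]]  # equivalent of self.mask[:T, :T]

for row in trimmed:
    print(["x" if masked else "." for masked in row])
# ['.', 'x', 'x', 'x']
# ['.', '.', 'x', 'x']
# ['.', '.', '.', 'x']
# ['.', '.', '.', '.']
```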

class GPT2LanguageModel(nn.Module):

    def __init__(self, vocab_size, d_model=512, n_heads=8, num_layers=6, context_size=128, dropout=0.1):
        super().__init__()
        self.vocab_size = vocab_size
        self.d_model = d_model
        self.context_size = context_size
        # Token and position embedding layers
        self.token_embed = nn.Embedding(vocab_size, d_model)
        self.pos_embed = PositionalEncoding(d_model, dropout=dropout)
        # Transformer encoder = decoder without cross-attention block
        encoder_layer = nn.TransformerEncoderLayer(
            d_model=d_model,
            nhead=n_heads,
            dim_feedforward=4*d_model,
            dropout=dropout,
            activation="gelu",
            batch_first=True
        )
        self.transformer = nn.TransformerEncoder(encoder_layer, num_layers=num_layers)
        # Output projection from the embedding size to the vocabulary size
        self.lm_head = nn.Linear(d_model, vocab_size, bias=False)
        # Create causal mask and initialize weights
        self.register_buffer("mask", self._generate_square_subsequent_mask(context_size))
        self._init_weights()

    def _init_weights(self):
        nn.init.normal_(self.token_embed.weight, mean=0.0, std=0.02)
        nn.init.normal_(self.lm_head.weight, mean=0.0, std=0.02)

    def _generate_square_subsequent_mask(self, sz):
        # True above the diagonal: position i may not attend to positions j > i
        mask = torch.triu(torch.ones(sz, sz), diagonal=1).bool()
        return mask

    def forward(self, input_ids, targets=None):
        # Get batch size B and sequence length T from inputs
        B, T = input_ids.shape
        device = input_ids.device
        # Generate combined token and position embedding vectors
        x = self.pos_embed(self.token_embed(input_ids))
        # Create required causal mask by trimming precomputed mask to current input length
        causal_mask = self.mask[:T, :T].to(device)
        # Pass inputs and mask to transformer
        x = self.transformer(x, mask=causal_mask)
        # Compute output logits
        logits = self.lm_head(x)  # (B, T, vocab_size)
        loss = None
        # If targets are available (during training), compute loss
        if targets is not None:
            loss = F.cross_entropy(
                logits.view(-1, self.vocab_size),
                targets.view(-1),
                ignore_index=-1,
            )
        # Return logits (for inference) and the loss (for training)
        return logits, loss

    @torch.no_grad()
    def generate(self, input_ids, max_new_tokens=50, temperature=1.0, top_k=10):
        for _ in range(max_new_tokens):
            # Condition on at most the last context_size tokens, but keep the full sequence
            idx_cond = input_ids[:, -self.context_size:]
            logits, _ = self(idx_cond)
            logits = logits[:, -1, :] / temperature  # focus on last token
            # Top-k filtering
            if top_k is not None and top_k < logits.size(-1):
                topk_vals, topk_idx = torch.topk(logits, top_k)
                probs = F.softmax(topk_vals, dim=-1)
                next_token = topk_idx.gather(
                    1, torch.multinomial(probs, num_samples=1)
                )
            else:
                # Fallback to full softmax sampling
                probs = F.softmax(logits, dim=-1)
                next_token = torch.multinomial(probs, num_samples=1)
            input_ids = torch.cat([input_ids, next_token], dim=1)
        return input_ids

The class GPT2LanguageModel also implements the generate() method. Given a prompt in the form of a tensor input_ids of token indices with shape (B, T), it generates a response by repeatedly predicting the next token and appending it to the sequence. Rather than always picking the single most likely token, the next token is sampled; by default, from the top_k=10 tokens with the highest scores (logits). The temperature parameter provides another way to control how deterministic or random this sampling is. The logits are divided by the temperature before the softmax, so values below 1 make the distribution more peaked, and values above 1 make it flatter.
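The interplay of temperature and top-k can be illustrated without PyTorch. The sketch below mirrors the sampling logic of generate() for a single step on a hand-picked list of logits; the numbers are made up. A temperature below 1 sharpens the distribution over the k retained tokens, while a temperature above 1 flattens it.

```python
import math
import random

def top_k_distribution(logits, k, temperature=1.0):
    """Softmax over the k highest temperature-scaled logits (temperature > 0)."""
    scaled = [value / temperature for value in logits]
    top = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:k]
    m = max(scaled[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(scaled[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def sample_next_token(logits, k, temperature=1.0, rng=random):
    """Draw one token index from the top-k distribution."""
    dist = top_k_distribution(logits, k, temperature)
    indices = list(dist)
    return rng.choices(indices, weights=[dist[i] for i in indices], k=1)[0]

logits = [2.0, 1.0, 0.5, -1.0]
for t in (0.5, 1.0, 2.0):
    # Probability of the highest-scoring token when keeping the top 2
    print(t, round(top_k_distribution(logits, k=2, temperature=t)[0], 3))
# 0.5 0.881
# 1.0 0.731
# 2.0 0.622
```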

We can now create the model instance and the optimizer to start the training. Again, we go with a smaller model in demo mode, where smaller refers to a smaller embedding size, fewer attention heads, and fewer encoder layers. Note that you are unlikely to see great results in terms of human-like responses, particularly when training for only 5 epochs (the default; see further below). However, you should be able to see responses that are often piece-wise coherent.

if mode == "demo":
    model = GPT2LanguageModel(tokenizer.vocab_size, d_model=128, n_heads=4, num_layers=3).to(device)
else:
    model = GPT2LanguageModel(tokenizer.vocab_size, d_model=256, n_heads=8, num_layers=6).to(device)

optimizer = optim.AdamW(
    model.parameters(),
    lr=3e-4,            # initial learning rate
    betas=(0.9, 0.95),  # common choice for GPT-style LMs instead of the default (0.9, 0.999)
    weight_decay=0.1    # encourages generalization
)

print(model)

GPT2LanguageModel(
  (token_embed): Embedding(50257, 128)
  (pos_embed): PositionalEncoding(
    (dropout): Dropout(p=0.1, inplace=False)
  )
  (transformer): TransformerEncoder(
    (layers): ModuleList(
      (0-2): 3 x TransformerEncoderLayer(
        (self_attn): MultiheadAttention(
          (out_proj): NonDynamicallyQuantizableLinear(in_features=128, out_features=128, bias=True)
        )
        (linear1): Linear(in_features=128, out_features=512, bias=True)
        (dropout): Dropout(p=0.1, inplace=False)
        (linear2): Linear(in_features=512, out_features=128, bias=True)
        (norm1): LayerNorm((128,), eps=1e-05, elementwise_affine=True)
        (norm2): LayerNorm((128,), eps=1e-05, elementwise_affine=True)
        (dropout1): Dropout(p=0.1, inplace=False)
        (dropout2): Dropout(p=0.1, inplace=False)
      )
    )
  )
  (lm_head): Linear(in_features=128, out_features=50257, bias=False)
)
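The printout above also makes it possible to count parameters by hand. The arithmetic below uses only the layer shapes listed there: vocabulary size 50,257, d_model=128, feed-forward size 512, and 3 layers. It is a back-of-the-envelope check; in the notebook, sum(p.numel() for p in model.parameters()) should report the same total. Note that nn.MultiheadAttention stores the query, key, and value projections as a single in_proj_weight of shape (3*d_model, d_model) plus a bias. This is why they do not appear as separate lines in the printout.

```python
vocab, d, ff, layers = 50257, 128, 512, 3

embedding = vocab * d                           # token_embed
lm_head = d * vocab                             # lm_head (bias=False)
attention = (3 * d * d + 3 * d) + (d * d + d)   # in_proj (Q, K, V) + out_proj
feedforward = (d * ff + ff) + (ff * d + d)      # linear1 + linear2
norms = 2 * (2 * d)                             # norm1 + norm2 (weight + bias each)
per_layer = attention + feedforward + norms

total = embedding + lm_head + layers * per_layer
print(per_layer, total)                         # 198272 13460608
print(round((embedding + lm_head) / total, 3))  # 0.956
```

In the demo configuration, roughly 96% of the parameters sit in the token embedding and the output projection, simply because the vocabulary is large relative to d_model.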

Before we start training, let's quickly check what the model generates for a given prompt right now. In demo mode, we simply print the response as the output of the code cell below. Otherwise, we use our auxiliary function generate_example_responses() to save the responses to our example prompts to a file for later inspection.

if mode == "demo":
    prompt = "the best part of the movie was"
    print(generate_response(prompt, tokenizer, model))
else:
    generate_example_responses(tokenizer, model, path=f"{folder}example-responses-{0}.txt")

the best part of the movie was MEN pipeiopCong YOU thruiopiopaks YOUiop Points childcare YOU foreground Vanilla childcareESPN textures textures Points barrier textures Herman bathroomsiop erasediopESPNaks childcare MEN MEN beginner MEN thru motivate bandampire boiler barrieraksaks MEN Points cannonaks thru Hockey childcare

Unsurprisingly, without training, everything after the prompt tokens is complete gibberish. However, you can use this initial result as a baseline for comparison with the responses after even just the first epoch.

Model Training¶

Using our auxiliary function train_epoch(), the code for training our model for several epochs becomes very simple; see the code cell below. In demo mode, we simply print the model's response to our example prompt (see above) after each epoch. In full training mode, we save a checkpoint as well as the example responses to disk after each epoch. For an initial run, even in demo mode, you may want to set the number of epochs to $1$, just to see how long training a single epoch takes.
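The helper train_epoch() is defined earlier in the notebook and is not repeated here. For orientation only, the following is a hypothetical sketch of what one epoch of next-token training typically involves: a forward pass that returns (logits, loss) like GPT2LanguageModel, a backward pass, and an optimizer step. The stand-in model ToyLM, the function name train_epoch_sketch(), the random batch, and the gradient-clipping value are all assumptions for illustration, not taken from the notebook's helper.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class ToyLM(nn.Module):
    """Hypothetical stand-in with the same (logits, loss) interface as GPT2LanguageModel."""
    def __init__(self, vocab_size=20, d_model=16):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        self.head = nn.Linear(d_model, vocab_size)

    def forward(self, input_ids, targets=None):
        logits = self.head(self.embed(input_ids))
        loss = None
        if targets is not None:
            loss = F.cross_entropy(logits.reshape(-1, logits.size(-1)), targets.reshape(-1))
        return logits, loss

def train_epoch_sketch(loader, model, optimizer, max_grad_norm=1.0):
    """One pass over (input_ids, targets) batches; returns the average loss."""
    model.train()
    total = 0.0
    for input_ids, targets in loader:
        optimizer.zero_grad()
        _, loss = model(input_ids, targets)
        loss.backward()
        torch.nn.utils.clip_grad_norm_(model.parameters(), max_grad_norm)
        optimizer.step()
        total += loss.item()
    return total / len(loader)

torch.manual_seed(0)
seq = torch.randint(0, 20, (8, 9))
batch = [(seq[:, :-1], seq[:, 1:])]  # targets are the inputs shifted by one position
model = ToyLM()
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-2)
losses = [train_epoch_sketch(batch, model, optimizer) for _ in range(20)]
print(losses[0], losses[-1])  # the loss on this fixed batch should drop
```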

Important: While the code below saves a checkpoint after each epoch in full training mode, it does not automatically resume training from the last checkpoint after an interruption; in fact, the method load_checkpoint() is not used anywhere in the notebook. This keeps the code as simple and clean as possible. Also, even in full training mode, and assuming a decent consumer GPU, the training time is measured in a few hours rather than days.
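If you want to add resuming yourself, one common pattern is sketched below. The dictionary keys and the function names save_checkpoint_sketch() and resume_from() are assumptions made for illustration. They are not necessarily the format written by the notebook's save_checkpoint(), so adapt the keys to whatever that helper actually stores.

```python
import os
import tempfile

import torch
import torch.nn as nn

def save_checkpoint_sketch(model, optimizer, epoch, loss, path):
    # Hypothetical checkpoint layout: model and optimizer state plus bookkeeping
    torch.save({"epoch": epoch, "loss": loss,
                "model_state": model.state_dict(),
                "optimizer_state": optimizer.state_dict()}, path)

def resume_from(path, model, optimizer, device="cpu"):
    """Restore states in place and return the number of completed epochs."""
    ckpt = torch.load(path, map_location=device)
    model.load_state_dict(ckpt["model_state"])
    optimizer.load_state_dict(ckpt["optimizer_state"])
    return ckpt["epoch"]

model = nn.Linear(4, 2)
optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)
path = os.path.join(tempfile.mkdtemp(), "checkpoint-3.pt")
save_checkpoint_sketch(model, optimizer, epoch=3, loss=1.23, path=path)

fresh = nn.Linear(4, 2)
fresh_opt = torch.optim.AdamW(fresh.parameters(), lr=3e-4)
start_epoch = resume_from(path, fresh, fresh_opt)
# Training would then continue with: for epoch in range(start_epoch, num_epochs): ...
```

Because the loop names checkpoints with epoch+1, the stored epoch equals the number of completed epochs. This makes it a natural start value for range().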

num_epochs = 5

for epoch in range(num_epochs):
    description = f"Epoch {epoch+1}/{num_epochs}"
    epoch_loss = train_epoch(loader, model, optimizer, description)
    if mode == "demo":
        print(generate_response(prompt, tokenizer, model))
    else:
        save_checkpoint(model, optimizer, epoch+1, epoch_loss, path=f"{folder}checkpoint-{epoch+1}.pt")
        generate_example_responses(tokenizer, model, path=f"{folder}example-responses-{epoch+1}.txt")

print(f"Done training {num_epochs} epochs.")

the best part of the movie was a few, and it. there's been a movie. i can't have been an lot of the film that and i can be the movie was a very very very bad. the movie to get the first movie. it was a very film.

the best part of the movie was in a film. this movie. there are so bad movie is just the movie. i can make up to be a few minutes. it's no sense of the story, but this. the film is a film, and one of the end,

the best part of the movie was a film was to me of the movie. it's really just a film. but i've been funny. it. i was a movie are a bad, but this movie is a great movie that the "i am not even

the best part of the movie was the movie is bad. the movie was a good. this movie was not to the characters and you have the movie i was the movie was a bad, it was the movie was so stupid. this is that the worst. i had a

the best part of the movie was the plot, but they could have been a few other than it was not to do not as it is a lot better. it just to the worst film was made. it was a film. it is no sense. and the worst is the acting

Done training 5 epochs.

In demo mode and after only a handful of epochs, you should see the responses slowly improve, with short phrases becoming increasingly coherent. However, getting the model to generate fluent and coherent responses across whole sequences requires a substantial amount of training and a sufficiently large model.

Summary¶

Training a GPT-style language model from scratch provides valuable insight into the core components and workflow that underlie modern large language models. Even when using a small, toy dataset, the process mirrors the same fundamental principles used to train state-of-the-art systems. The training begins with preparing a text corpus for next-word prediction, the central objective of autoregressive language models. This involves tokenizing the text, building a vocabulary, and generating input-target pairs where the model learns to predict the next token in a sequence based on the preceding ones.
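Here is a toy sketch of what such input-target pairs look like. The token IDs, the context size, and the hypothetical make_pairs() helper with its stride parameter are all made up for illustration. Each input window is paired with the same window shifted one position to the right, so every position has a next token to predict.

```python
def make_pairs(token_ids, context_size, stride=1):
    """Split one token sequence into (input, target) windows; target = input shifted by one."""
    pairs = []
    for start in range(0, len(token_ids) - context_size, stride):
        chunk = token_ids[start:start + context_size + 1]
        pairs.append((chunk[:-1], chunk[1:]))
    return pairs

tokens = [101, 7, 42, 13, 99, 5]  # made-up token IDs for one short review
for inp, tgt in make_pairs(tokens, context_size=3):
    print(inp, "->", tgt)
# [101, 7, 42] -> [7, 42, 13]
# [7, 42, 13] -> [42, 13, 99]
# [42, 13, 99] -> [13, 99, 5]
```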

At the heart of the model lies the Transformer architecture, which uses stacked layers of self-attention and feedforward networks to model long-range dependencies in text. In a GPT-style (decoder-only) setup, the Transformer employs masked (causal) self-attention, ensuring that the prediction for a given position depends only on the current and earlier tokens in the sequence. Positional encodings, often fixed sinusoidal functions, are added to the token embeddings to give the model a sense of order, since attention itself has no inherent notion of sequence. Together, these design elements enable the model to capture complex syntactic and semantic relationships between words.

The training process typically involves minimizing a cross-entropy loss that measures how well the model predicts the next token. Through repeated optimization steps, the model gradually improves its internal language representations. While a small model trained on limited data will not produce fluent or generalizable text, it still exhibits the same learning dynamics observed in much larger systems, offering a practical and interpretable context for understanding how Transformers learn.
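For a concrete feel of this objective, the sketch below computes the same quantity as F.cross_entropy(..., ignore_index=-1) in the model's forward(), in plain Python. The result is the average negative log-probability of the correct next token, skipping positions whose target is -1. The logits are made-up numbers over a 3-token vocabulary, and the function name is hypothetical.

```python
import math

def next_token_cross_entropy(logits_per_position, targets, ignore_index=-1):
    """Mean of -log softmax(logits)[target] over positions whose target != ignore_index."""
    losses = []
    for logits, target in zip(logits_per_position, targets):
        if target == ignore_index:
            continue
        m = max(logits)
        log_z = m + math.log(sum(math.exp(v - m) for v in logits))  # log-sum-exp
        losses.append(log_z - logits[target])                       # -log p(target)
    return sum(losses) / len(losses)

logits = [[2.0, 0.5, -1.0],  # position 0: model favors token 0
          [0.0, 0.0, 0.0],   # position 1: uniform guess over 3 tokens
          [1.0, 3.0, 0.0]]   # position 2: target is -1, so it is ignored
targets = [0, 2, -1]
print(next_token_cross_entropy(logits, targets))
```

A uniform guess over a vocabulary of size V costs exactly ln(V) per token. This gives a useful reference point for the loss of an untrained model.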

In practice, training competitive LLMs requires vastly larger datasets, models, and computational resources, often spanning hundreds of billions to trillions of tokens and thousands of GPUs. However, the overall workflow remains fundamentally the same: from data preparation and tokenization to model design, loss computation, and iterative optimization. By experimenting with small-scale GPT models, one can grasp the essential mechanics that carry over directly to real-world LLMs, providing a solid conceptual foundation for understanding the architecture and training pipeline behind today’s leading generative models.